This Google Ads script uses GPT to summarize account performance

Last month, I shared my first GPT-enabled Google Ads script. It identifies missing RSA headlines and suggests new variants.

This month, I wanted to push GPT a little harder and see whether I could get it to write my next script for me. Spoiler alert: It worked! But it needed some handholding to get there. I’ll show you how I engineered the prompt to get a successful result.

The script I’m sharing uses OpenAI’s GPT to write a summary of a Google Ads account’s performance, along with some suggestions for improving it.

Making PPC reports more descriptive

PPC reporting can be a tedious task. By nature, it’s also repetitive because clients and stakeholders expect the latest report in their inbox regularly – be it weekly, monthly, or, heaven forbid, even daily.

There are plenty of great reporting tools (I work for one). While they can automate pulling in and visualizing the data, making sense of it and telling a story with it usually still requires a human touch. GPT excels at writing compelling stories, so it seemed like a good solution to my problem.

GPT and other generative AI tools are adept at producing well-written text. Because large language models (LLMs) are trained on billions of words, they’re very good at predicting how to put words together in a way that makes for a compelling read.

But as compelling as they may be, they’re not always true, and that’s a big problem when the goal is to share trustworthy reports with clients.

So I set out to see whether I could get GPT to be both accurate and a great storyteller about the data in an ads account.

GPT’s truth problem

GPT’s core strength, predicting the next word in a sequence, is also a weakness. It’s much less reliable when it comes to fact-checking and ensuring that what it says is correct.

Its training might have included dozens of blog posts about how to get more conversions in Google Ads.

Because those articles probably frequently mention tasks like checking budgets and managing CPA targets, GPT will likely include those things when it generates advice related to getting more conversions.

But it may get the details slightly wrong, like whether an advertiser whose CPA is lower than the target CPA should increase or decrease their ad budget. GPT isn’t solving a problem analytically but rather predicting the words to include in its advice.

Another problem is that GPT remains bad at math, despite OpenAI’s work to address this known problem.

For example, if you give it facts like how many clicks and impressions a campaign has, it’s not safe to assume that it will correctly determine the CTR from this information, even though we all know the formula is simple: CTR = clicks / impressions.

Sometimes GPT will get it right, but there’s no guarantee.

To avoid calculation errors, I decided it would be safer to do the math myself. Rather than trusting GPT to calculate metrics like CTR and conversion rate correctly, I provided the values for those metrics in the prompt.
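The calculations themselves are simple. Here is a minimal sketch in JavaScript (the language Google Ads scripts use) of computing derived metrics before they go into the prompt. It assumes raw stats with cost in micros, which is how Google Ads reports cost; `deriveMetrics` and its field names are hypothetical, and conversion rate is simplified to conversions / clicks (Google Ads itself divides by interactions).

```javascript
// Sketch: compute derived metrics in the script so GPT never has to.
// Assumption: raw stats look like { clicks, impressions, costMicros, conversions },
// with cost in micros (1,000,000 micros = 1 unit of account currency).

// Return null instead of Infinity/NaN when the denominator is zero.
function safeDivide(numerator, denominator) {
  return denominator > 0 ? numerator / denominator : null;
}

// Round to a fixed number of decimals to keep the prompt short and readable.
function round(value, decimals) {
  if (value === null) return null;
  const factor = Math.pow(10, decimals);
  return Math.round(value * factor) / factor;
}

function deriveMetrics(raw) {
  const cost = raw.costMicros / 1e6;
  return {
    clicks: raw.clicks,
    impressions: raw.impressions,
    cost: round(cost, 2),
    ctr: round(safeDivide(raw.clicks, raw.impressions), 4),         // clicks / impressions
    avgCpc: round(safeDivide(cost, raw.clicks), 2),                  // cost / clicks
    conversions: raw.conversions,
    convRate: round(safeDivide(raw.conversions, raw.clicks), 4),    // simplified: conversions / clicks
    costPerConversion: round(safeDivide(cost, raw.conversions), 2), // cost / conversions
  };
}
```

Returning `null` for zero denominators lets the CSV show an empty cell rather than a misleading `Infinity` that GPT might try to interpret.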

How to provide GPT with facts about your business

The specific task I wanted to automate was describing how an account’s performance changed last month compared to the month before and including some optimization suggestions.

When creating this automation, I couldn’t jump straight into the code. I had to manually create a process that worked before turning that process into an automation.

The first step was to experiment with GPT to determine what data it needed so it would stop making up facts and instead rely on the truth for crafting its stories. This required giving it Google Ads data with the facts I wanted it to describe.

Fortunately, GPT can take a table as input and figure out how to interpret the various cells. So I created a table of campaign performance and exported it as a CSV text file that could be copied and pasted into a GPT prompt.

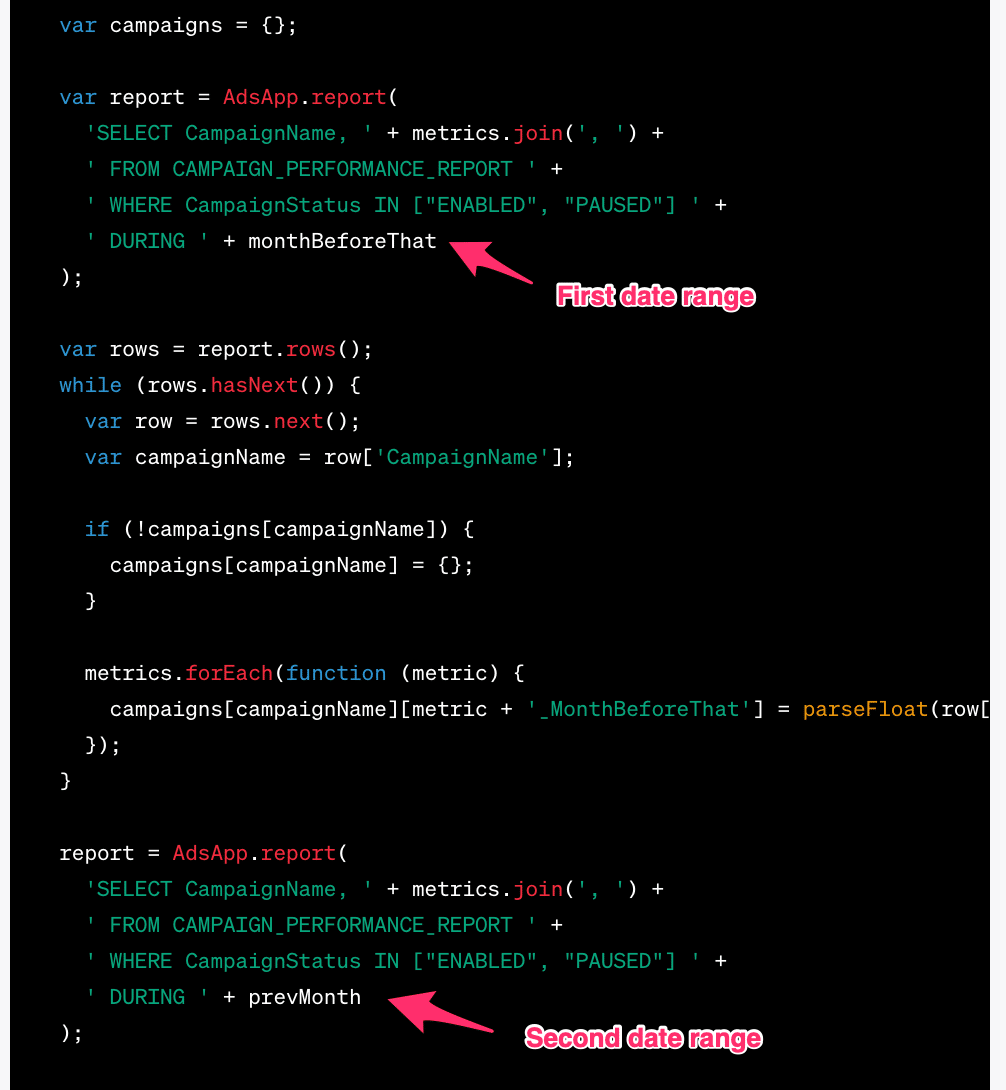

Because I wanted GPT to comment on the changes in performance between two date ranges, I initially brought in two separate CSV strings, one for each period.

But two separate CSV strings use more tokens than the same data combined into a single CSV with separate columns for each date range.

So, to make the automation work a little better with bigger accounts, I switched to generating a single combined CSV string.
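A combined CSV can be built along these lines. This is a sketch rather than the script’s actual code: the input shape (campaign name mapped to `current` and `previous` stats) and the column names are assumptions.

```javascript
// Sketch: one CSV with a column per metric per period, instead of two CSVs.
// Assumption: statsByCampaign looks like
//   { [campaignName]: { current: { clicks, ... }, previous: { clicks, ... } } }
const METRICS = ["clicks", "impressions", "cost", "ctr", "avgCpc",
  "conversions", "convRate", "costPerConversion"];

// Quote a field only when needed (comma, double quote, or newline), doubling inner quotes.
function csvField(value) {
  const text = value === null || value === undefined ? "" : String(value);
  return /[",\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
}

function buildCombinedCsv(statsByCampaign) {
  const header = ["Campaign"];
  for (const m of METRICS) header.push(m + "_last_month");
  for (const m of METRICS) header.push(m + "_prev_month");
  const lines = [header.join(",")];

  for (const [name, periods] of Object.entries(statsByCampaign)) {
    const row = [name];
    // A campaign missing from one period (e.g., launched last month) gets empty cells.
    for (const m of METRICS) row.push(periods.current ? periods.current[m] : null);
    for (const m of METRICS) row.push(periods.previous ? periods.previous[m] : null);
    lines.push(row.map(csvField).join(","));
  }
  return lines.join("\n");
}
```

Each campaign name appears once instead of twice, and there is one header row instead of two, which is where the token savings come from as the number of campaigns grows.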

With factual data ready to insert into a prompt, I could move on to engineering the prompt to get the best possible results.

Prompt engineering

With factual data to work with, I next needed to tell GPT what to do with those facts. The prompt could be as simple as:

- “Write a summary of the campaigns’ performance comparing the two periods.”

GPT is smart enough to figure out which columns in the CSV data belong to which period.

If it tends to focus too much on certain metrics you’d like to deprioritize, add more detail to the prompt, like:

- “Do not include Search Lost IS in the summary.”

Next, I wanted it to include some optimization tips. To make the suggestions more reliable and more in line with my own management style, I loaded the prompt with some additional facts like these:

- The target CPA is $20. A cost higher is bad, and a cost lower is good.

- If the Search lost IS (budget) > 10% and the CPA is below the target, the budget should be raised.

- If the CPA is above the target, the bids should be adjusted.
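Because rules like these are deterministic, you can also apply them in code to double-check GPT’s recommendations. A minimal sketch, assuming CPA and Search lost IS (budget) are already available per campaign, with lost IS as a fraction (as returned by `metrics.search_budget_lost_impression_share` in GAQL); `recommend` and the message strings are hypothetical.

```javascript
// Sketch: the optimization rules above, applied deterministically.
// Thresholds copied from the facts; field names are assumptions.
const TARGET_CPA = 20;           // dollars
const MAX_BUDGET_LOST_IS = 0.10; // 10%, expressed as a fraction

const ADJUST_BIDS = "CPA is above target: adjust bids.";
const RAISE_BUDGET = "CPA is below target and budget limits impressions: raise the budget.";
const NO_RULE = "No rule applies.";
const NO_DATA = "No conversions: CPA can't be compared with the target.";

// stats.costPerConversion: CPA in dollars, or null when there were no conversions.
// stats.budgetLostIs: Search lost IS (budget) as a fraction, e.g. 0.15 for 15%.
function recommend(stats) {
  const cpa = stats.costPerConversion;
  if (cpa === null || cpa === undefined) return NO_DATA;
  if (cpa > TARGET_CPA) return ADJUST_BIDS;
  if (cpa < TARGET_CPA && stats.budgetLostIs > MAX_BUDGET_LOST_IS) return RAISE_BUDGET;
  return NO_RULE;
}
```

If GPT’s advice for a campaign disagrees with what `recommend` returns, that is a signal to re-read the summary before sending it to a client.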

Once I sent a very detailed prompt with the CSV data, the facts, and a request for what to do with this data, GPT started giving solid answers.
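Assembling that kind of prompt is plain string concatenation. The sketch below combines a task, a list of facts, and the CSV data, reusing the instructions and facts quoted in this section; the section labels (“Facts:”, “Campaign data (CSV):”) and the optional `style` argument are illustrative assumptions.

```javascript
// Sketch: assemble the prompt from the task, an optional writing style,
// the facts (targets and rules), and the CSV data.
function buildPrompt({ task, facts, csv, style }) {
  const parts = [task];
  if (style) parts.push("Write in a " + style + " style.");
  parts.push("Facts:\n" + facts.map((fact) => "- " + fact).join("\n"));
  parts.push("Campaign data (CSV):\n" + csv);
  return parts.join("\n\n");
}

// Example using the instructions and facts from this article (CSV is made up).
const prompt = buildPrompt({
  task: "Write a summary of the campaigns' performance comparing the two periods. " +
    "Do not include Search Lost IS in the summary.",
  facts: [
    "The target CPA is $20. A cost higher is bad, and a cost lower is good.",
    "If the Search lost IS (budget) > 10% and the CPA is below the target, the budget should be raised.",
    "If the CPA is above the target, the bids should be adjusted.",
  ],
  csv: "Campaign,clicks_last_month,clicks_prev_month\nBrand,120,100",
});
```

Keeping facts as a list makes it easy to add or remove rules without touching the rest of the prompt.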

With all the puzzle pieces in place, it was time to ask GPT to write me the automation.

Getting GPT to write Ads scripts

The code for a Google Ads script to pull data from an account isn’t particularly complicated. It’s part of almost any script and very well documented.

So I crossed my fingers and asked GPT to write a script to pull the data for me with this prompt:

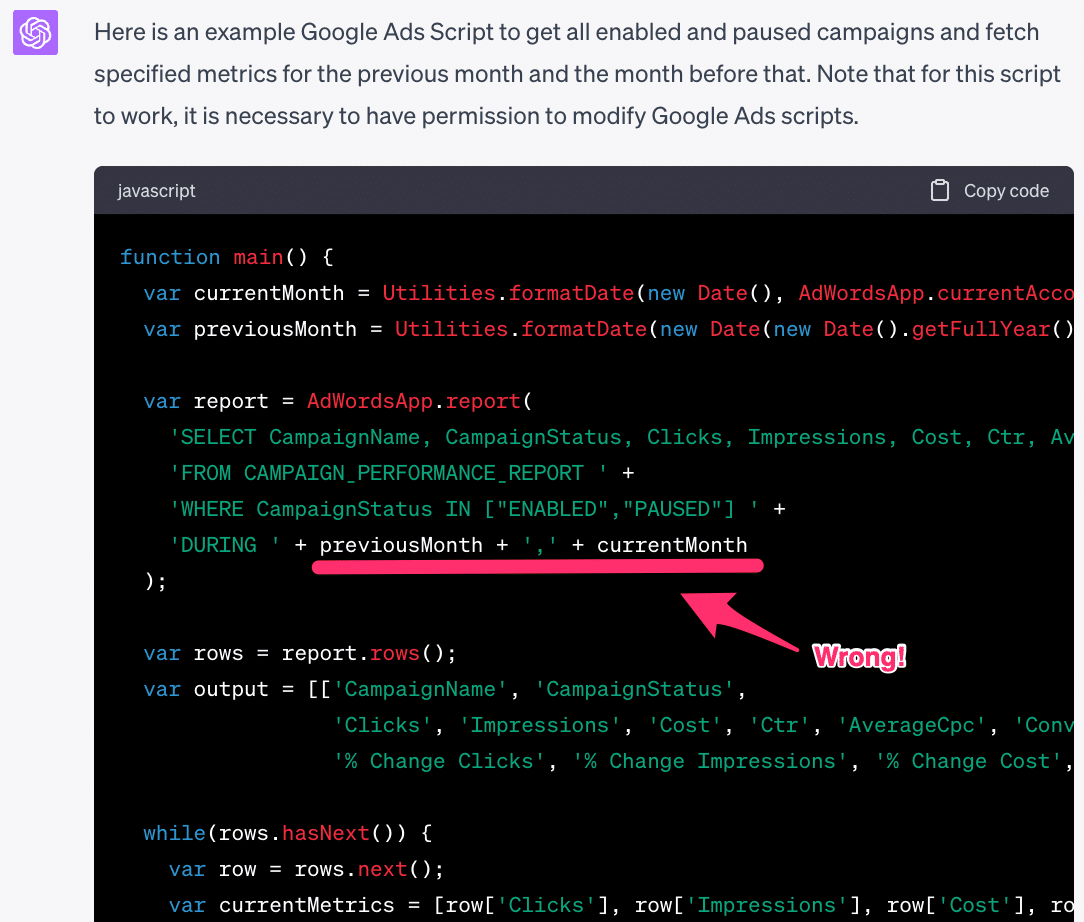

The response looked like a good script, but one thing seemed off. It wrote a GAQL query that tried to include both date ranges I wanted to compare in a single query. That is not supposed to work.

So I asked GPT to try again, and while the implementation changed slightly, it once again messed up the date ranges in the GAQL query.

At this point, I could have given up and fixed the code myself, but instead, I did some prompt engineering.

What if my prompt was confusing GPT?

I told it to:

- “Get the clicks, impressions, cost, CTR, average CPC, conversions, conversion rate, and cost per conversion metrics for the previous month and the month before that.”

Could I be clearer and tell it this should be done in two separate queries that would be merged later?

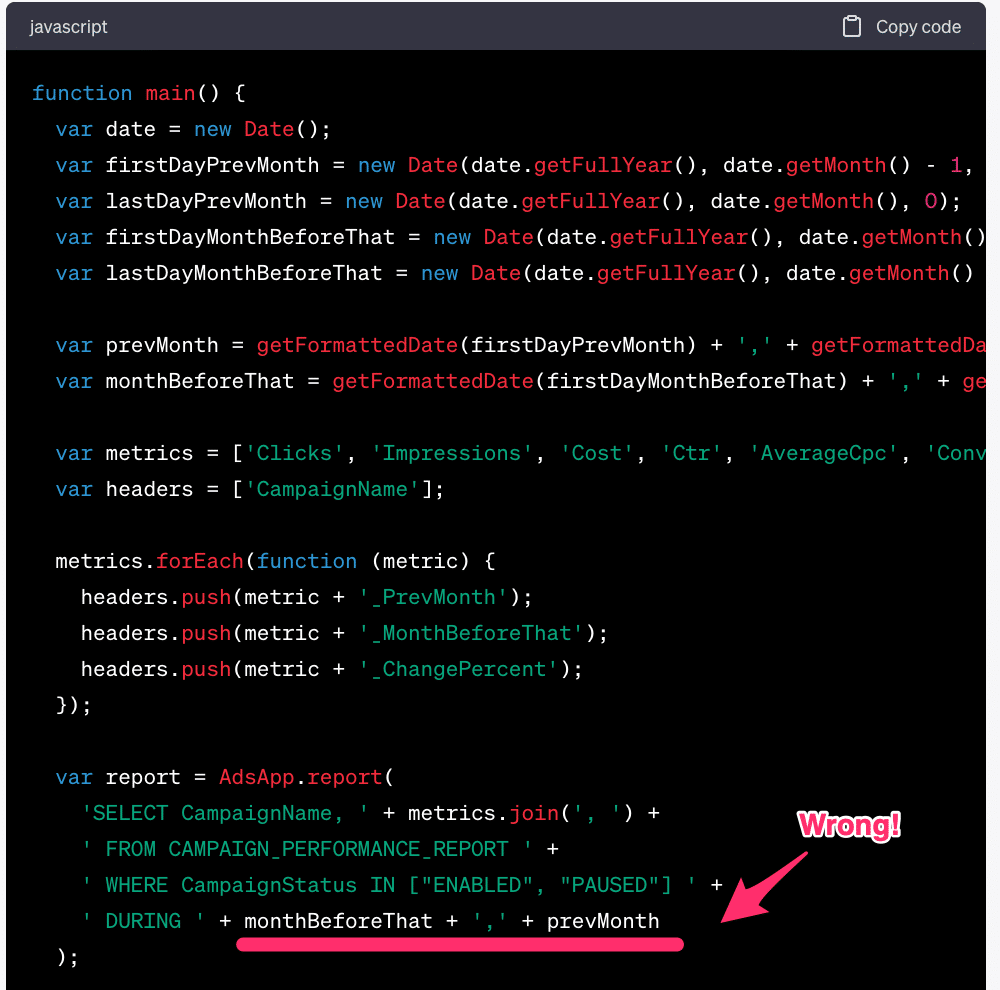

So I changed the prompt to include this new text:

- “Get the clicks, impressions, cost, CTR, average CPC, conversions, conversion rate, and cost per conversion metrics. Get the report for 2 date ranges: last month and the month before that. build a map where the key is the campaign name and it includes the stats from the 2 date ranges.”

This is much more precise.

Now GPT was writing the correct code. After installing it in my Google Ads account, it immediately worked as expected and generated the needed CSV data.
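For reference, the merge step that the revised prompt describes can be sketched like this. It assumes rows shaped like the objects `AdsApp.search()` returns in Google Ads scripts (`row.campaign.name`, `row.metrics.clicks`, and so on); plain objects are used here so the logic runs anywhere, and the `current`/`previous` keys are hypothetical names.

```javascript
// Sketch: build a map keyed by campaign name holding stats for both date ranges.
// Assumption: each row looks like
//   { campaign: { name }, metrics: { clicks, impressions, costMicros, conversions } }

function addRows(map, rows, periodKey) {
  for (const row of rows) {
    const name = row.campaign.name;
    if (!map[name]) map[name] = {};
    // Number() guards against 64-bit values that may arrive as strings.
    map[name][periodKey] = {
      clicks: Number(row.metrics.clicks),
      impressions: Number(row.metrics.impressions),
      cost: Number(row.metrics.costMicros) / 1e6, // micros -> currency units
      conversions: Number(row.metrics.conversions),
    };
  }
  return map;
}

// One array of rows per query, i.e., per date range.
function mergeByCampaign(lastMonthRows, monthBeforeRows) {
  const map = {};
  addRows(map, lastMonthRows, "current");
  addRows(map, monthBeforeRows, "previous");
  return map;
}
```

A campaign that only ran in one of the two periods simply has no entry for the other period key, which downstream code can render as empty cells.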

This was a good lesson in prompt engineering for me. If you hire a new team member who’s never done PPC, you probably need to be pretty precise in your instructions when you ask for help. It’s the same with GPT: precision matters!

Subject matter expertise also still matters. Someone who’s never worked with GAQL or Google Ads API reports might not know that you can’t get data for two date ranges in a single call. Without that knowledge, finding the error in GPT’s response could be very difficult.
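Concretely, the fix is to issue one query per date range. A sketch of building those two GAQL queries, assuming the date math runs in JavaScript; `buildQueries` and its helpers are hypothetical, and the field list mirrors the metrics named in the prompt.

```javascript
// Sketch: one GAQL query per date range (last month, and the month before that).
const FIELDS = [
  "campaign.name",
  "metrics.clicks",
  "metrics.impressions",
  "metrics.cost_micros",
  "metrics.ctr",
  "metrics.average_cpc",
  "metrics.conversions",
  "metrics.conversions_from_interactions_rate",
  "metrics.cost_per_conversion",
];

// Format a Date as YYYY-MM-DD using its local calendar fields.
function ymd(date) {
  const mm = String(date.getMonth() + 1).padStart(2, "0");
  const dd = String(date.getDate()).padStart(2, "0");
  return date.getFullYear() + "-" + mm + "-" + dd;
}

// First and last day of the month `offset` months before the month of `today`.
function monthRange(today, offset) {
  const start = new Date(today.getFullYear(), today.getMonth() - offset, 1);
  // Day 0 of the following month is the last day of this month.
  const end = new Date(today.getFullYear(), today.getMonth() - offset + 1, 0);
  return { start: ymd(start), end: ymd(end) };
}

function buildQuery(range) {
  return "SELECT " + FIELDS.join(", ") +
    " FROM campaign" +
    " WHERE segments.date BETWEEN '" + range.start + "' AND '" + range.end + "'";
}

function buildQueries(today) {
  return {
    lastMonth: buildQuery(monthRange(today, 1)),
    monthBefore: buildQuery(monthRange(today, 2)),
  };
}
```

In a Google Ads script, each query string would be passed to `AdsApp.search()` separately and the results merged afterward. Note that this sketch computes dates in the runtime’s local time zone, which may differ from the account’s time zone.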

Bottom line: when asking GPT to generate code, it helps to write pseudo-code rather than staying general and only telling it what outputs you expect. The more you tell it about how to arrive at the output, the more likely it is to write code that works.

With the code to pull CSV data working, I now needed some code to send that data to GPT to ask for a summary.

Using GPT in Google Ads scripts

To use GPT in a script, you need API access and an API key, which you can get by signing up on the OpenAI website. With that, you can write a simple function that calls the API with a prompt, gets the response, and prints it on the screen.

This code could be requested from GPT, but I already had it from last month’s RSA script, so I reused it.

Here’s the code snippet to use GPT in Google Ads scripts
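A function like the one described above can be sketched as follows, assuming OpenAI’s Chat Completions endpoint and the `UrlFetchApp` service built into Google Ads scripts. The model name, the temperature, and the `fetcher` parameter (which lets you pass `UrlFetchApp` in production and a stub when testing) are illustrative choices, not necessarily what the original script uses.

```javascript
// Sketch: send a prompt to OpenAI's Chat Completions endpoint and return the reply text.
const OPENAI_URL = "https://api.openai.com/v1/chat/completions";

// Request options in the shape UrlFetchApp.fetch(url, params) expects.
function buildRequest(prompt, apiKey, model) {
  return {
    method: "post",
    contentType: "application/json",
    headers: { Authorization: "Bearer " + apiKey },
    payload: JSON.stringify({
      model: model || "gpt-3.5-turbo", // assumed default; use a model your account can access
      messages: [{ role: "user", content: prompt }],
      temperature: 0.2, // lower values give more focused, less varied output
    }),
    muteHttpExceptions: true, // return error responses instead of throwing
  };
}

// fetcher: UrlFetchApp inside Google Ads scripts, or any object with the same fetch() shape.
function askGpt(prompt, apiKey, fetcher, model) {
  const response = fetcher.fetch(OPENAI_URL, buildRequest(prompt, apiKey, model));
  const code = response.getResponseCode();
  const text = response.getContentText();
  if (code !== 200) {
    throw new Error("OpenAI API returned HTTP " + code + ": " + text);
  }
  const body = JSON.parse(text);
  return body.choices[0].message.content.trim();
}

// In a Google Ads script, for example:
// Logger.log(askGpt(prompt, "YOUR_API_KEY", UrlFetchApp));
```

Checking the response code before parsing avoids a confusing JSON error when the API returns a non-JSON error page.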

Putting it together

Next, I put the two scripts above together. The first script gets the data I need for my prompt, and the second script sends that data as a prompt to GPT and captures the response, which is then rendered on screen.

Grab a copy of the complete code here and remember to add your own API key to start using it:

Then experiment with the facts and the prompt. The line in the code where you enter facts should include details you want GPT to know, such as:

- What your target is.

- Whether a number higher or lower than the target is good or bad.

- Facts about your account optimization methodology (e.g., what you would recommend doing if the CPA is too high and the impressions have decreased).

GPT will pull from the facts you provided rather than making stuff up when it summarizes the performance.

You can also engineer the prompt to do things the way you want.

For example, you could ask GPT to include or exclude particular metrics in its summary or tell it what style to write in, e.g., conversational or business-oriented.

Remember that this script uses the OpenAI API, which is not free. So every time you run this, it will cost money.

I recommend running this script as needed and not putting it on an automated schedule.
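Since each run costs money, a rough size check before calling the API can help avoid surprises with large accounts. This sketch uses the common rule of thumb that one token is roughly four characters of English text; it is an approximation rather than a real tokenizer, numeric CSV data may tokenize differently, and `maxTokens` is whatever limit you choose.

```javascript
// Sketch: rough pre-flight token estimate (about 4 characters per token).
const CHARS_PER_TOKEN = 4;

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Throw before spending money if the prompt looks larger than you intended.
function checkPromptSize(prompt, maxTokens) {
  const estimate = estimateTokens(prompt);
  if (estimate > maxTokens) {
    throw new Error("Prompt is roughly " + estimate + " tokens, above the limit of " +
      maxTokens + ". Trim campaigns or columns first.");
  }
  return estimate;
}
```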

Summarizing PPC performance with GPT

GPT is excellent at writing but can have problems with factual correctness. That is why providing as many facts as possible in the prompts is helpful.

By using a Google Ads script, facts about account performance can automatically be prepared in a format that works with GPT.

Use this script to provide GPT with facts about your account and get a performance summary that can be shared with clients and stakeholders.

I encourage you to check it out and let me know what you think.

The post This Google Ads script uses GPT to summarize account performance appeared first on Search Engine Land.

from Search Engine Land https://searchengineland.com/google-ads-script-gpt-summarize-account-performance-427822
